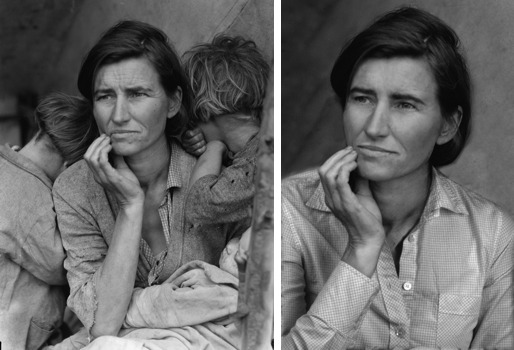

My apologies to the descendants of Dorothea Lange and the family of Florence Owens Thompson, of blessed memory, for twice defacing the most famous photo of the Depression. I hope you’ll agree, after reading this, that it was done with noble intentions.

Everyone’s talking about AI. Is it a useful tool, or could it be used for evil? The answer is: Yes. To prove this point, I deliberately ruined a historic photo using Generative Fill, a recently introduced AI-based image-editing tool, to show how it can be misused. It’s not the first time I’ve been involved in the defacement of Dorothea Lange’s “Migrant Mother”…

(Also see more practical examples of how AI can be used to restore old photos here.)

First, a flashback.

It all started in 2005, as an April Fool’s hoax. I was the managing editor of Popular Photography magazine, and my editor, John Owens, and I had an idea for a prank to pull on our readers in our April issue. It’s something we tried to do, with varying success, every year. We decided to parody one of our regular features, “The Fix”, in which we used Photoshop to show how to improve imperfect reader-submitted photos.

The original defacement: Migrant Mother by Dorothea Lange, before and after we Photoshopped it to death, as it appeared in the April 2005 issue of Popular Photography magazine. We got letters.

That year, we chose three iconic, historic images by well-known photographers, pretended they were bad, and then “fixed” them. We made sure the changes were ridiculous. Doc Edgerton’s photo of a bullet shot through an apple? Put it on top of somebody’s head! Walker Evans’ photo of two-story homes overlooking a graveyard, with a steel mill behind it? Too distracting: just crop into one of the windows.

But the one that caused the most controversy was Dorothea Lange’s Depression-era photo of migrant worker Florence Owens Thompson and her children in a tent: she looks overwhelmed with worry, in tattered clothes, her children leaning against her for support in those awful times. John and I agreed: This was a perfect target. We roped in our resident Photoshop whiz, Debbie Grossman (who now works for Adobe), to make our plan a reality.

So, with John and me doubled over in laughter, Debbie did the actual work on a publicly available hi-res image we got from the Library of Congress. We got rid of the kids, bobbed Mrs. Thompson’s hair, had our assistant Art Director (who had a similar build) wear a nice gingham blouse, and superimposed that image over Mrs. Thompson’s tattered rags. Debbie used Photoshop’s tools to turn the frown into a smile and smooth out her worry wrinkles.

The image editing process took a couple of hours, plus the time it took to photograph our assistant Art Director modeling the blouse and matching the lighting.

The subtext was a commentary on the mindless application of Photoshop’s capabilities to transform photos. Perhaps a bit of humor would help people think “whoa—let’s pull back and not over-use this new technology.” (Spoiler: It didn’t.)

We expected letters, and we got them: hundreds of rants, hate mail, and excommunication threats. Stuff like: “downright heresy,” “disrespectful and ugly,” and “I can’t believe you’d stoop so low.” Of course, when I replied that it was an April Fool’s joke, most folks responded with: “Wow, you really had me going” and “Is that a big laugh I hear coming from Dorothea’s grave?”

Flash-Forward: Meet Generative Fill

A few months ago, Adobe announced Photoshop 24.5, including a beta version of a new AI feature, Generative Fill. You select an area of a photo, and Generative Fill, drawing on a database of existing photos, fills the space with…something newish. In the photography community, it’s the talk of the town. I’m hearing everything from “This changes everything” to “photography has been killed by AI” to “What a cool and useful feature that will make my life easier when fine-tuning my photos.”

It’s been a few years since I stopped working for photo publications and websites to run a full-time freelance photography business in New Jersey. I’ve had no regrets, but sometimes something comes up and I wish I still had that platform to share my thoughts. The arrival of photo-realistic AI imaging is one of those moments. So instead of Pop Photo, I’m here. I admit I’m using the audacious changes made to the Migrant Mother to attract attention, but I hope you’ll forgive me: my aim is to add something useful to the ongoing conversation.

Before downloading and experimenting with Adobe’s beta release, I had a few concerns: The AI was using/adapting/transforming images from somewhere…where? Will the original photographers be acknowledged? Will they be compensated for the use of their images? I shared these concerns with certain YouTubers who were providing breathless first-look examples of the software in action.

Fortunately, I was pointed to Adobe’s website, which explains that the source images used in AI composites will be drawn only from Adobe Stock, a database of over 300 million files, according to Adobe. In the release version, photographers will be credited in the metadata, with a backup in the cloud in case some nefarious party decides to strip out the information. Adobe also promises compensation, albeit very little. In the past, compensation for non-commercial use of images has been in the neighborhood of 90 cents, and I don’t think that will change much. For a photographer who’s trying to make a living, that’s less than Florence Owens Thompson scraped by on, but if photographers agreed to these onerous terms, that’s on them.

(The killing of stock photography as a money-making proposition is another subject entirely.)

Anyway, while the compensation aspect is flawed, I do applaud Adobe for leading the industry in developing technology to identify and acknowledge the original content creators whose work is incorporated into its AI-generated images.

Let The Defacement Begin

With those concerns eased, I got down to the business of using the latest technology to again deface the Migrant Mother. Same directions: Glam her face, lose the kids, fix her hair, replace the shirt, remove her worry lines and turn her frown upside down. And for good measure, replace the background.

And here it is.

When we did this at Pop Photo in 2005, it took about four hours of people power. When I did it on my own with Generative Fill, it took about ten minutes. And, some might argue, the results were even better. Yes, there are “tells” here and there. Her eyes are different. I’m not sure what’s going on in her neck. Her right sleeve is doing some weird things. The replaced skin is less grainy than the original (I added a layer of grain-like digital noise to even that out).

I know, the image reproduced here is rather small. I did that purposely…I’d hate for this to go viral in high resolution. Leave that honor for the real version.

So, What Have We Learned?

- Just because the latest technology lets us do something doesn’t mean we should.

- On the other hand, the latest advancements in technology will make it possible to restore images that were once considered beyond repair. As someone who restores old images, I think that’s good news.

- As photographers and restorers, we need to be aware of the latest tools and use them appropriately.